SwiftTry: Fast and Consistent Video Virtual Try-On with Diffusion Models

Abstract

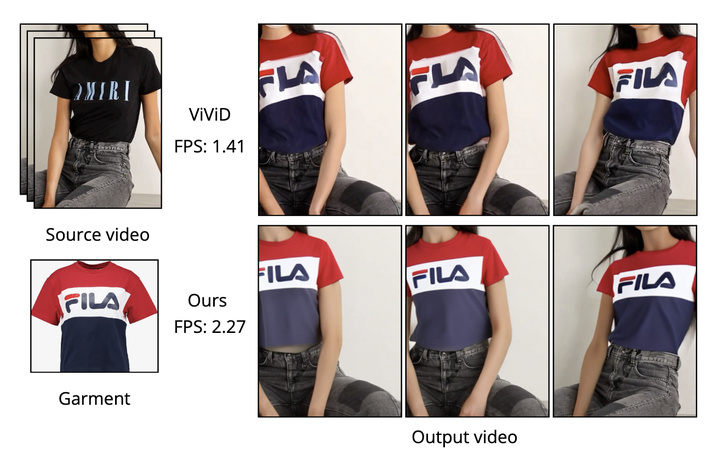

Given an input video of a person and a new garment, the goal of this paper is to synthesize a new video of that person wearing the specified clothing item while maintaining spatio-temporal consistency. Although significant progress has been made in image-based virtual try-on, extending this success to videos often leads to inconsistencies across frames. Several approaches have tried to address temporal consistency in video virtual try-on, but they encounter major challenges when dealing with long video sequences, including temporal incoherence and high computational costs. To tackle these issues, we reconceptualize video virtual try-on as a conditional video inpainting task, using garments as input conditions. In specific, our approach extends image diffusion models with temporal modules to enhance temporal awareness. To overcome high computational cost on long videos, we introduce ShiftCaching, a training-free technique that ensures temporal coherence while reducing redundant computations. Additionally, we present a new video try-on dataset, the TikTokDress dataset, which includes more complex backgrounds, challenging movements, and higher resolution than existing public datasets. Extensive experiments demonstrate that our approach significantly outperforms existing baselines in both the qualitative and quantitative aspects.